Attaining a ‘single customer view’ is perhaps the primary objective for every digitally focused business. If you can understand who is browsing and buying, and where, when and why they are doing it, then you can communicate with these people in the way they expect. There are, however, many blockers, restrictions and caveats in attaining this view. In this article we will attempt to get to the bottom of an issue with using primary keys to manage your data inside popular digital analytics platforms, one that could stop you before you even get started.

Analytics at the Core

To a certain extent we will assume that you are planning to use something like Google Universal Analytics or Adobe Analytics as your core digital marketing and behaviour tracking tool. I say this rather candidly as it’s something we see across most of our current and potential clients.

Within these two particular tools and many others all data is recorded against a primary key (think hierarchical databases). This primary key will always be set by default by the technology provider and ensures that regardless of the specific implementation route there is always an ability to structure data in the reporting interface.

Commonly this default method is to use a script that runs client-side (executed by the browser) to check/set a cookie – first and/or third party – which is used as the common key. This means that when implementing these technologies we get our data in a logical structure and can understand, for example, how people referred from a particular source converted.

Getting to the Crux of the Matter

So, let’s say you’re now interested in doing a better job of attaining a single customer view. You want to be able to understand how people’s pre-purchase behaviour relates to that purchase as well as how their interaction with your brand across multiple channels and devices impacted that purchase flow… all basic questions to get started.

Problem #1

- The default method of setting a primary key DOES NOT WORK ACROSS MULTIPLE DEVICES

- Any method of setting a primary key using a client-side script requires the browser to execute the script and thus generate the key value. While the logic will be consistent across browsers and devices, the output will always be different because it is a different browser. There is no way that browser X on device A can ever know what browser Y is doing on device B
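
To make this concrete, here is a minimal sketch of the check/set logic, simulated in Python rather than in real browser script. The function name, the cookie name and the use of plain dictionaries as stand-ins for each browser’s cookie storage are all our own assumptions for illustration, not how any particular vendor implements it.

```python
import uuid


def get_or_set_visitor_id(cookie_jar: dict, cookie_name: str = "visitor_id") -> str:
    """Return the ID stored in this browser's cookie jar, creating one if absent.

    `cookie_jar` is a hypothetical stand-in for one browser's cookie storage.
    """
    if cookie_name not in cookie_jar:
        # No cookie yet: generate a fresh random ID, as a client-side script would.
        cookie_jar[cookie_name] = uuid.uuid4().hex
    return cookie_jar[cookie_name]


# Two browsers on two devices, each with its own isolated cookie storage.
browser_x_on_device_a: dict = {}
browser_y_on_device_b: dict = {}

id_a_first = get_or_set_visitor_id(browser_x_on_device_a)
id_a_again = get_or_set_visitor_id(browser_x_on_device_a)
id_b = get_or_set_visitor_id(browser_y_on_device_b)

same_browser_is_stable = id_a_first == id_a_again
different_browsers_differ = id_a_first != id_b
print(same_browser_is_stable, different_browsers_differ)
```

The same logic runs in both places, but because each browser only ever sees its own storage, the same person ends up with two unrelated keys.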

At this point I expect most of you are thinking ‘well of course… I know this!’. If that’s the case then good as this will mean that you’re about to (or have already) run up against these more complex problems…

Problem #2

- If you overwrite the primary key you CANNOT CORRELATE DATA ACROSS SESSIONS

- If you’ve moved past the first issue and have decided to set your own primary key that allows you to ensure the value is the same regardless of the device/browser a user is on then this is your next problem.

- Adobe Analytics: Will allow you to override the ‘vid’ value in the GET request made to Adobe but if the vid value changes within the session or across sessions all tracking prior to the vid change will NOT be associated with the new vid

- Google Analytics: Will allow you to override the ‘uid’ value in the GET request, which is a little more functional than doing so via Adobe as it also re-associates the event data that occurred in the same session in which the uid was set. It does not, however, bind any data prior to that session, even though that data could be connected back to previous sessions where the primary key was the same before being overwritten


So, what does this all mean!? Well, if you’ve gone to the effort of setting your own primary key, the last thing you want to discover is that overriding the default value from your analytics provider has destroyed your ability to understand attribution, because you’ve now created two separate records for the same user… grrr!
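
The ‘two separate records’ effect can be sketched with a toy hit log. Everything here is hypothetical: the hashing of a login email into a stable key is just one way you might derive a cross-device value, the hit structure is invented, and the grouping mimics the simpler behaviour described above where nothing recorded before the key change is re-associated.

```python
import hashlib
from collections import defaultdict


def stable_customer_key(login_email: str) -> str:
    """Derive a key that is identical on every device (assumed approach)."""
    normalised = login_email.strip().lower()
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()[:16]


customer_key = stable_customer_key("Jane.Doe@example.com")

# Hits before login carry the default cookie key; hits after login carry our own key.
hits = [
    {"key": "cookie-abc123", "page": "/landing", "source": "email-campaign"},
    {"key": "cookie-abc123", "page": "/product", "source": None},
    {"key": customer_key, "page": "/account", "source": None},
    {"key": customer_key, "page": "/checkout/complete", "source": None},
]


def group_by_key(hit_log):
    """Group page views under whichever primary key each hit was recorded against."""
    grouped = defaultdict(list)
    for hit in hit_log:
        grouped[hit["key"]].append(hit["page"])
    return dict(grouped)


visitors = group_by_key(hits)
print(len(visitors), "visitor records for one real person")
```

The purchase sits under one key and the campaign that drove it sits under the other, which is exactly the attribution break described above.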

Let’s assume you can live with this limitation within your analytics system though as you’ve decided that actually it’s ok as analytics systems are not really designed to back-process data. You have a BI team or perhaps experience working with MySQL and connecting it up to Tableau. You can just export the data out of analytics and do the join yourself to understand the bigger picture…
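
As a rough idea of what ‘doing the join yourself’ could involve, the sketch below back-fills anonymous hits with a customer ID learned later. The row layout and field names are assumptions for illustration; a real export will look different, and in practice you would more likely do this in SQL or a BI tool.

```python
from collections import defaultdict

# Hypothetical exported rows: each hit carries the default cookie ID and,
# once the user has identified themselves, our own customer ID.
exported_rows = [
    {"session": "s1", "cookie_id": "c-111", "customer_id": None, "event": "landing"},
    {"session": "s2", "cookie_id": "c-111", "customer_id": "cust-42", "event": "login"},
    {"session": "s2", "cookie_id": "c-111", "customer_id": "cust-42", "event": "purchase"},
    {"session": "s3", "cookie_id": "c-222", "customer_id": None, "event": "landing"},
]


def build_identity_map(rows):
    """Map each cookie ID to the customer ID it was seen with (last one wins)."""
    mapping = {}
    for row in rows:
        if row["customer_id"] is not None:
            mapping[row["cookie_id"]] = row["customer_id"]
    return mapping


def stitch(rows):
    """Re-key every hit to a customer ID where one is known, else keep the cookie ID."""
    identity = build_identity_map(rows)
    journeys = defaultdict(list)
    for row in rows:
        resolved = row["customer_id"] or identity.get(row["cookie_id"]) or row["cookie_id"]
        journeys[resolved].append(row["event"])
    return dict(journeys)


journeys = stitch(exported_rows)
print(journeys)
```

Note the ‘last one wins’ rule is a simplification: on a shared device it would assign one person’s anonymous browsing to another, so a real process needs a more careful policy.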

Problem #3

- The default primary key dimension often CANNOT BE EXPORTED IN RAW FORMAT

- This is not true of every system – we know it to be true for Google Universal Analytics and Adobe Analytics for example but we also know it is possible for comScore DAx. For AA and GA however you will need to ensure the raw value used to set and/or overwrite the primary key is also pushed into a dimensional reporting value

- Adobe Analytics: There is a ‘Visitor ID’ key available in Data Warehouse for example but this is an Adobe-specific transformation of the value actually used to create all the connections. This is actually fine if all you wanted to do was to export data out of Adobe and run some processes to look across sessions for example. However, if you were planning to connect up other sources then you can forget it as the key that may be passed from analytics to other sources to allow this bind is completely different

- Google Analytics: I imagine this will change but it is not currently possible to export data (API or Custom Reporting) using the UID or CID as the primary reporting dimension. As such you will need to ensure this value is pushed into a custom dimension to allow you to export it

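
For the Google Analytics case, pushing the key into a reporting dimension might look something like the sketch below, which builds (but does not send) a Universal Analytics Measurement Protocol style query string. The parameter names (`v`, `tid`, `cid`, `uid`, `t`, `dp`, `cd<index>`) are as we understand that protocol; the helper function, the choice of custom dimension index 1 and all the values are assumptions, and the dimension would need to be configured in the property first.

```python
from urllib.parse import urlencode, parse_qs


def build_pageview_payload(tracking_id, client_id, user_id, page_path, key_dimension_index=1):
    """Build a pageview payload that duplicates the user ID into a custom dimension.

    `key_dimension_index` is a hypothetical slot chosen for this example.
    """
    params = {
        "v": "1",                 # protocol version
        "tid": tracking_id,       # property ID
        "cid": client_id,         # default cookie-based client ID
        "uid": user_id,           # our own primary key
        "t": "pageview",          # hit type
        "dp": page_path,          # document path
        f"cd{key_dimension_index}": user_id,  # same key, exposed as an exportable dimension
    }
    return urlencode(params)


payload = build_pageview_payload(
    tracking_id="UA-XXXXX-Y",
    client_id="35009a79-1a05-49d7-b876-2b884d0f825b",
    user_id="cust-42",
    page_path="/checkout/complete",
)
decoded = parse_qs(payload)
print(payload)
```

The point is simply that the value used as `uid` is also sent as an ordinary dimension, so it survives into exports that will not expose the primary key itself.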

Perhaps now you’ve figured out a way to manage your own primary key and you’re ok with extracting the data to run more complex analysis, and you’ve remembered that you need to push this variable into its own reporting variable for use across other systems. All you need to do now is export the data, how difficult can that be…

Problem #4

- In many cases the analytics systems will not make it simple to extract data at the level you really need it

- Here we need to draw on specific experiences that we’ve had over and above known limitations

- Adobe Analytics

- Known Issue 4a_1 – If using standard reporting, ad hoc analysis or the API you may run into a value cardinality problem. The default number of unique values within a custom variable is 500,000 per month. Beyond this the data gets rolled up into ‘Low Traffic’ or ‘undefined’ depending on your extraction method

- Non-Documented Issue 4b_1 – If 500k uniques is not an issue for you or you’ve negotiated a higher limit then see what happens when you try and pull this data out of the system. You will not be able to use standard reporting features to get at this data and would have to rely on DataWarehouse or the API

- DataWarehouse – No major issue here but anyone who has used this will know that it can have speed and reliability question marks. There is no guarantee when data will be delivered and no real guarantee that it actually will be delivered at all. In most cases it is delivered and done so within a day or so but it really depends on what other reports are running and how the system health is in general.

- API – There is a single API queue per customer. This means that if there are loads of Report Builder requests running you can guess what is going to happen if you start slamming this queue for a report that might have many hundreds of thousands of rows to return.

- Non-Documented Issue 4c_1 – It is possible to get data out another way from Adobe. Each day clients can receive a raw ‘Data Feed’ which is a dump of all the raw hit-level data received for the previous day for a specific RSID. This is not subject to any sampling, cardinality or queuing issues… fantastic! However, when you get hold of some of this data you will realise very quickly that to make any sense of it you need some serious kit and a BI team to be able to process and manage it all. What you effectively get is log-file level data from Adobe and you need to do the rest such as updating references for browser names, device names, segmentation etc. The real issue we think this causes is that if you were to invest in the tools and team to make use of this it is the ultimate tie-in to Adobe. You’d not only be getting hit data with no additional value but be tied to that source being Adobe…

- Google Analytics

- Known Issue 4a_1 – Depending on which version you’re running (Premium or not) you may be subject to similar cardinality issues as per Adobe.

- Free – for non pre-aggregated tables a limit of 1 million unique entries is permitted. This may however be a moot point if you’re running into high session sampling issues by having a session count that greatly exceeds 500k for a specific reporting period. One way around this could be to extract the data at a much more granular time interval but that can have other greater knock-on impacts.

- Premium – as per free there are different limits for non pre-aggregated tables and in this case will allow up to 3 million unique rows of data assuming the 25 million session limit is not exceeded.

- Undocumented Issue 4b_1 – Assuming you do not run into cardinality issues there are also several limits set on the API in terms of extracting the data. While there are public limits mostly related to usage quotas the limits of extraction are variable and differ if an application is given an approval status from Google. Contact us to discuss this in more detail now that we are a Google Analytics Certified Partner

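
To see why a unique-value limit is so damaging when the variable holds a per-customer key, here is a toy illustration of a rollup. It assumes a simple first-come-first-served rule and a tiny limit of 2 so the effect is visible; the vendors’ real logic is more nuanced, and the function name and label handling are our own.

```python
from collections import Counter


def apply_unique_value_limit(values, limit, rollup_label="Low Traffic"):
    """Count hits per value, lumping any value first seen after `limit` uniques
    into a single rollup bucket (simplified, assumed behaviour)."""
    seen = set()
    report = Counter()
    for value in values:
        if value in seen or len(seen) < limit:
            seen.add(value)
            report[value] += 1
        else:
            report[rollup_label] += 1
    return report


customer_keys = ["cust-1", "cust-2", "cust-1", "cust-3", "cust-4", "cust-3"]
report = apply_unique_value_limit(customer_keys, limit=2)
print(dict(report))
```

Once a key lands in the rollup bucket it can no longer be joined to anything, so any customer above the limit is effectively lost from the export.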

If you’re still reading this I imagine your head might be starting to spin now. How is it going to be possible to attain a single customer view when even the very foundations of the process are so complicated, let alone the issues you will face when trying to access 1st party data that doesn’t originate from the digital properties you own!?

Get ready for the shock moment… there is no easy answer. No silver bullet and certainly no unicorns that will deliver it for you, sorry. There are two ways of coming close to attaining a single customer view in my opinion:

1. DIY

2. Use the best combination of tools in the market place that avoid vendor tie-ins

#1 is fairly self-explanatory in that it is possible to manage your own primary keys, process all the data across sessions about users, surface this information to your digital properties and alter experiences based on the specific behavioural information you have collected. My guess is that most of you will be able to hypothesise about how difficult this would be not only to build but to maintain. This is why there are a number of tools available in the marketplace to help with this which brings us on to option 2.

#2 still involves a lot of hard work but most importantly it requires a team motivated to make it work. This might seem obvious but to integrate a range of tools to create a platform capable of attaining, and more importantly, managing the single customer view requires complete cross-functional agreement. It is no good if this is done purely as an acquisition tool for example as this just covers part of the story.

Practical Example Solution

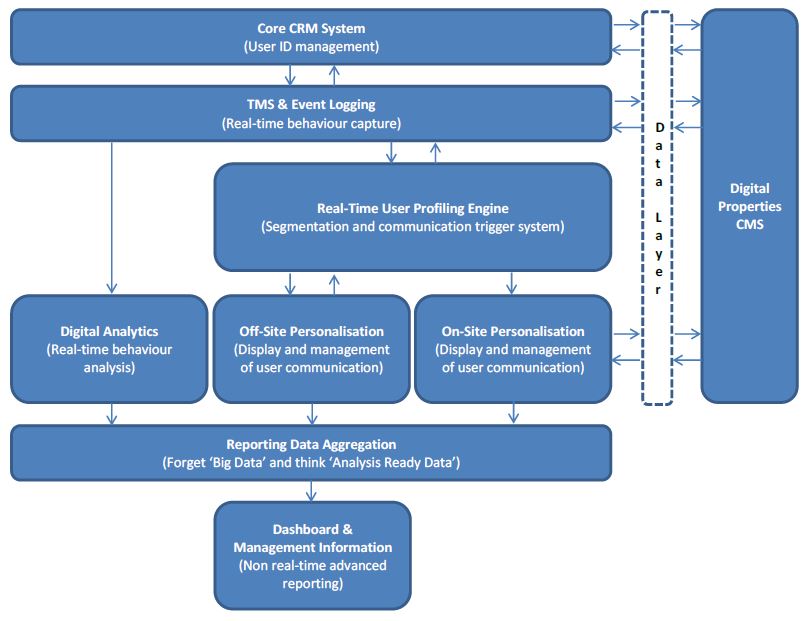

Let’s imagine you’ve read and understood all these issues and potentially experienced some or all of them for yourself. The next logical step would be to ask what could be done to get round them and actually stand a chance of getting to a single customer view. I will cover this topic in more detail in a future post but for now let me leave you with this example architectural overview:

If you can’t wait until the next installment then please feel free to get in touch. We’re very passionate about the work we do and love talking about our experiences. If there’s a good match between your needs and our skills then let’s talk about how we can add value to your business. If you’re not ready to take on super-smart consultants such as us then don’t worry. We’ll still enjoy talking to you and providing some high-level advice until such time as you are ready.